Overview

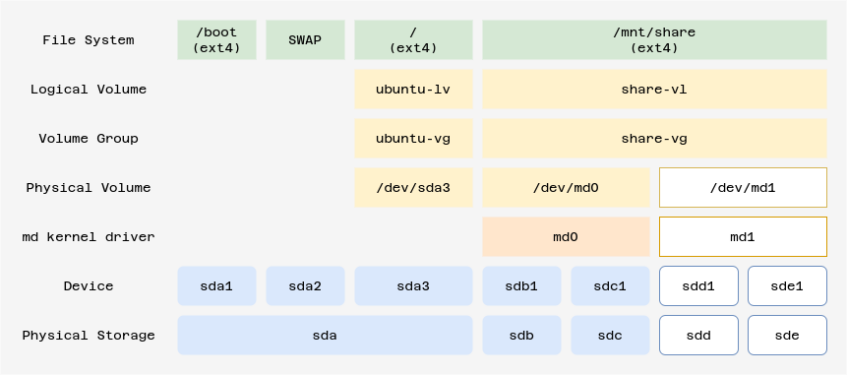

Add a newly created RAID 1 array (md1) to an existing LVM volume group.

Storage Diagram

Reference

Environment

# uname -a

Linux nas 5.15.0-58-generic #64-Ubuntu SMP Thu Jan 5 11:43:13 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

# cat /etc/os-release

PRETTY_NAME="Ubuntu 22.04.1 LTS"

NAME="Ubuntu"

VERSION_ID="22.04"

VERSION="22.04.1 LTS (Jammy Jellyfish)"

VERSION_CODENAME=jammy

ID=ubuntu

ID_LIKE=debian

HOME_URL="https://www.ubuntu.com/"

SUPPORT_URL="https://help.ubuntu.com/"

BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"

PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"

UBUNTU_CODENAME=jammy

# Summary

RAID

LVM

Check Storage

# fdisk -l

Disk /dev/loop0: 61.96 MiB, 64970752 bytes, 126896 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/loop1: 79.95 MiB, 83832832 bytes, 163736 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/loop2: 49.83 MiB, 52248576 bytes, 102048 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/loop3: 63.27 MiB, 66347008 bytes, 129584 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/loop4: 111.95 MiB, 117387264 bytes, 229272 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk model: VBOX HARDDISK

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: FA6120DA-07AF-4E7F-BAFD-B3F929BBFFCF

Device Start End Sectors Size Type

/dev/sda1 2048 4095 2048 1M BIOS boot

/dev/sda2 4096 3674111 3670016 1.8G Linux filesystem

/dev/sda3 3674112 20969471 17295360 8.2G Linux filesystem

Disk /dev/mapper/ubuntu--vg-ubuntu--lv: 8.25 GiB, 8854175744 bytes, 17293312 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk model: HARDDISK

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: F2A8B7FB-DA02-4B16-9B13-D4AC1AAC69C5

Device Start End Sectors Size Type

/dev/sdb1 2048 20969471 20967424 10G Linux RAID

Disk /dev/sdc: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk model: HARDDISK

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 088CEDE5-A558-485C-9F7C-97C8EBF6D20F

Device Start End Sectors Size Type

/dev/sdc1 2048 20969471 20967424 10G Linux RAID

Disk /dev/sdd: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk model: HARDDISK

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk model: HARDDISK

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/md0: 9.99 GiB, 10725883904 bytes, 20948992 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/share_vg-share_lv: 9.99 GiB, 10724835328 bytes, 20946944 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

#

# ls -l /dev/sd[de]

brw-rw---- 1 root disk 8, 48 Jan 29 11:05 /dev/sdd

brw-rw---- 1 root disk 8, 64 Jan 29 11:05 /dev/sde

# RAID [ Partition ]

# parted /dev/sdd

GNU Parted 3.4

Using /dev/sdd

Welcome to GNU Parted! Type 'help' to view a list of commands.

(parted) unit GB

(parted) mklabel gpt

(parted) mkpart primary

File system type? [ext2]? ext4

Start? 0%

End? 100%

(parted) set 1 raid on

(parted) p

Model: VBOX HARDDISK (scsi)

Disk /dev/sdd: 10.7GB

Sector size (logical/physical): 512B/512B

Partition Table: gpt

Disk Flags:

Number Start End Size File system Name Flags

1 0.00GB 10.7GB 10.7GB ext4 primary raid

(parted) quit

Information: You may need to update /etc/fstab.

# parted /dev/sde

GNU Parted 3.4

Using /dev/sde

Welcome to GNU Parted! Type 'help' to view a list of commands.

(parted) unit GB

(parted) mkpart primary

Error: /dev/sde: unrecognised disk label

(parted) mklabel gpt

(parted) mkpart primary

File system type? [ext2]? ext4

Start? 0%

End? 100%

(parted) set 1 raid on

(parted) p

Model: VBOX HARDDISK (scsi)

Disk /dev/sde: 10.7GB

Sector size (logical/physical): 512B/512B

Partition Table: gpt

Disk Flags:

Number Start End Size File system Name Flags

1 0.00GB 10.7GB 10.7GB ext4 primary raid

(parted) quit

Information: You may need to update /etc/fstab.

#

#

# ls -l /dev/sd[de]*

brw-rw---- 1 root disk 8, 48 Jan 29 11:12 /dev/sdd

brw-rw---- 1 root disk 8, 49 Jan 29 11:12 /dev/sdd1

brw-rw---- 1 root disk 8, 64 Jan 29 11:13 /dev/sde

brw-rw---- 1 root disk 8, 65 Jan 29 11:13 /dev/sde1
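
The two interactive parted sessions above could also be expressed as one scripted command per disk. The sketch below only prints the commands rather than running them, because `mklabel` wipes any existing partition table. The helper name `print_partition_cmds` is hypothetical, and it assumes that in script mode on a GPT label `mkpart primary 0% 100%` uses `primary` as the partition name with no file system type hint (the interactive sessions answered `ext4`).

```shell
#!/bin/sh
# Dry-run helper (hypothetical name): prints one parted command per disk.
# Nothing is executed; review the output before running it as root.
print_partition_cmds() {
  for disk in "$@"; do
    # %% in the printf format yields a literal % in the output.
    printf 'parted --script %s mklabel gpt mkpart primary 0%% 100%% set 1 raid on\n' "$disk"
  done
}

print_partition_cmds /dev/sdd /dev/sde
```

Printing first makes it harder to relabel the wrong disk by accident; the device names should be checked against the `fdisk -l` output before anything is run.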

# RAID [ Array ]

# mdadm --create /dev/md1 --level=raid1 --raid-devices=2 /dev/sdd1 /dev/sde1

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

# cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md1 : active raid1 sde1[1] sdd1[0]

10474496 blocks super 1.2 [2/2] [UU]

[===>.................] resync = 19.1% (2001920/10474496) finish=0.7min speed=200192K/sec

md0 : active raid1 sdc1[1] sdb1[0]

10474496 blocks super 1.2 [2/2] [UU]

unused devices: <none>

# cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md1 : active raid1 sde1[1] sdd1[0]

10474496 blocks super 1.2 [2/2] [UU]

[==========>..........] resync = 54.3% (5691584/10474496) finish=0.3min speed=203270K/sec

md0 : active raid1 sdc1[1] sdb1[0]

10474496 blocks super 1.2 [2/2] [UU]

unused devices: <none>

#

# cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md1 : active raid1 sde1[1] sdd1[0]

10474496 blocks super 1.2 [2/2] [UU]

md0 : active raid1 sdc1[1] sdb1[0]

10474496 blocks super 1.2 [2/2] [UU]

unused devices: <none>

#
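
Instead of re-running `cat /proc/mdstat` by hand until the resync line disappears, the check can be scripted. This is a minimal sketch: the function name `resync_active` is hypothetical, and it assumes the mdstat layout shown above, where a progress line containing `resync =` or `recovery =` follows the array it belongs to.

```shell
#!/bin/sh
# Return 0 while the named array still shows a resync/recovery progress line.
# $1 = array name (e.g. md1), $2 = mdstat file (defaults to /proc/mdstat)
resync_active() {
  awk -v name="$1" '
    /^md[0-9]+ :/ { current = $1 }                      # remember which array we are in
    current == name && /(resync|recovery) *=/ { found = 1 }
    END { exit (found ? 0 : 1) }
  ' "${2:-/proc/mdstat}"
}

# Usage (polling loop, not run here):
#   while resync_active md1; do sleep 5; done
```

`mdadm --wait /dev/md1` blocks until the resync finishes and is probably the simpler choice when no custom polling is needed.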

# mdadm --misc --detail /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Jan 29 11:15:26 2023

Raid Level : raid1

Array Size : 10474496 (9.99 GiB 10.73 GB)

Used Dev Size : 10474496 (9.99 GiB 10.73 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Jan 29 11:16:19 2023

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : nas:1 (local to host nas)

UUID : 60b7ba70:94ed3937:3942a50e:306e664b

Events : 17

Number Major Minor RaidDevice State

0 8 49 0 active sync /dev/sdd1

1 8 65 1 active sync /dev/sde1

# mdadm.conf

# mdadm --detail --scan >> /etc/mdadm/mdadm.conf

#

# vim /etc/mdadm/mdadm.conf

#

# cat /etc/mdadm/mdadm.conf

# mdadm.conf

#

# !NB! Run update-initramfs -u after updating this file.

# !NB! This will ensure that initramfs has an uptodate copy.

#

# Please refer to mdadm.conf(5) for information about this file.

#

# by default (built-in), scan all partitions (/proc/partitions) and all

# containers for MD superblocks. alternatively, specify devices to scan, using

# wildcards if desired.

#DEVICE partitions containers

# automatically tag new arrays as belonging to the local system

HOMEHOST <system>

# instruct the monitoring daemon where to send mail alerts

MAILADDR root

# definitions of existing MD arrays

# This configuration was auto-generated on Tue, 09 Aug 2022 11:56:48 +0000 by mkconf

#ARRAY /dev/md0 UUID=6f52cdb8:efcbb936:e1a859a4:ef6e60ab

ARRAY /dev/md0 metadata=1.2 name=nas:0 UUID=6f52cdb8:efcbb936:e1a859a4:ef6e60ab

ARRAY /dev/md1 metadata=1.2 name=nas:1 UUID=60b7ba70:94ed3937:3942a50e:306e664b

#
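
Because `mdadm --detail --scan >> /etc/mdadm/mdadm.conf` appends every array, including ones already listed, the file usually needs manual cleanup afterwards. One way to avoid that is to append only the ARRAY lines whose UUID is not yet present. The sketch below works on two plain files so it can be tried without root; `missing_arrays` is a hypothetical helper, and it assumes commented-out ARRAY lines should not count as present.

```shell
#!/bin/sh
# Print ARRAY lines from the scan output whose UUID is not in the config.
# $1 = existing mdadm.conf, $2 = file holding `mdadm --detail --scan` output
missing_arrays() {
  conf=$1
  scan=$2
  while IFS= read -r line; do
    # Extract the UUID value from the ARRAY line, if any.
    uuid=$(printf '%s\n' "$line" | sed -n 's/.*UUID=\([0-9a-f:]*\).*/\1/p')
    [ -n "$uuid" ] || continue
    # Ignore commented lines, then look for the UUID among the active ones.
    grep -v '^[[:space:]]*#' "$conf" | grep -q "UUID=$uuid" || printf '%s\n' "$line"
  done < "$scan"
}

# Usage (as root, not run here):
#   mdadm --detail --scan > /tmp/scan.txt
#   missing_arrays /etc/mdadm/mdadm.conf /tmp/scan.txt >> /etc/mdadm/mdadm.conf
```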

initramfs update

# update-initramfs -u

update-initramfs: Generating /boot/initrd.img-5.15.0-58-generic

# cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md1 : active raid1 sde1[1] sdd1[0]

10474496 blocks super 1.2 [2/2] [UU]

md0 : active raid1 sdc1[1] sdb1[0]

10474496 blocks super 1.2 [2/2] [UU]

unused devices: <none>

# LVM [ Physical Volume ]

# pvcreate /dev/md1

Physical volume "/dev/md1" successfully created.

# LVM [ Volume Group Extended ]

# vgextend share_vg /dev/md1

Volume group "share_vg" successfully extended

# vgdisplay -v

--- Volume group ---

VG Name ubuntu-vg

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 2

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 1

Act PV 1

VG Size <8.25 GiB

PE Size 4.00 MiB

Total PE 2111

Alloc PE / Size 2111 / <8.25 GiB

Free PE / Size 0 / 0

VG UUID VhSrss-EZMH-UllN-KJ3e-t01T-1pra-LE2cPa

--- Logical volume ---

LV Path /dev/ubuntu-vg/ubuntu-lv

LV Name ubuntu-lv

VG Name ubuntu-vg

LV UUID QcRHWC-mFve-QL2e-Exi4-C6j3-PKnR-AhJjuY

LV Write Access read/write

LV Creation host, time ubuntu-server, 2023-01-23 12:05:30 +0000

LV Status available

# open 1

LV Size <8.25 GiB

Current LE 2111

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

--- Physical volumes ---

PV Name /dev/sda3

PV UUID 4PjQ16-zoHj-rdHz-nNRB-y3RE-veOF-OpBF0h

PV Status allocatable

Total PE / Free PE 2111 / 0

Archiving volume group "ubuntu-vg" metadata (seqno 2).

Creating volume group backup "/etc/lvm/backup/ubuntu-vg" (seqno 2).

--- Volume group ---

VG Name share_vg

System ID

Format lvm2

Metadata Areas 2

Metadata Sequence No 3

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 2

Act PV 2

VG Size <19.98 GiB

PE Size 4.00 MiB

Total PE 5114

Alloc PE / Size 2557 / <9.99 GiB

Free PE / Size 2557 / <9.99 GiB

VG UUID dOSwDU-aF0S-DXsw-0dMt-7Coj-qZKB-3iPmPo

--- Logical volume ---

LV Path /dev/share_vg/share_lv

LV Name share_lv

VG Name share_vg

LV UUID tWiGhf-0pQ3-TLcO-XCco-747i-Kj1y-gu2Mox

LV Write Access read/write

LV Creation host, time nas, 2023-01-29 01:10:01 +0000

LV Status available

# open 1

LV Size <9.99 GiB

Current LE 2557

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:1

--- Physical volumes ---

PV Name /dev/md0

PV UUID MIB5IS-shvC-0upD-XtHA-DnHG-ZcvU-V6IKOJ

PV Status allocatable

Total PE / Free PE 2557 / 0

PV Name /dev/md1

PV UUID 3qr5un-Psa4-B31h-Jn11-H7qD-QgxW-OO58Rz

PV Status allocatable

Total PE / Free PE 2557 / 2557

#

# df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 198M 1.2M 197M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 8.1G 4.3G 3.4G 57% /

tmpfs 988M 0 988M 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/sda2 1.7G 127M 1.5G 8% /boot

/dev/mapper/share_vg-share_lv 9.8G 24K 9.3G 1% /mnt/share

tmpfs 198M 4.0K 198M 1% /run/user/1000

#
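
The sizes in the `vgdisplay` output follow directly from the extent counts: each physical extent (PE) is 4 MiB, so 2557 PEs per array give just under 10 GiB, and the extended volume group holds 5114 PEs. A small worked check, with hypothetical helper names:

```shell
#!/bin/sh
# Convert a number of physical extents to MiB.
# $1 = extent count, $2 = extent size in MiB
pe_to_mib() {
  echo $(( $1 * $2 ))
}

# Convert MiB to GiB with two decimals.
mib_to_gib() {
  awk -v mib="$1" 'BEGIN { printf "%.2f\n", mib / 1024 }'
}

pe_to_mib 2557 4      # prints 10228 (MiB on each of md0 and md1)
mib_to_gib 10228      # prints 9.99
mib_to_gib 20456      # prints 19.98 (5114 PEs, the whole share_vg)
```

The logical volume still occupies only the 2557 extents on /dev/md0 at this point, which is why `df -h` continues to report 9.8G for /mnt/share.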

# LVM [ Logical Volume Extend ]

# lvdisplay

--- Logical volume ---

LV Path /dev/ubuntu-vg/ubuntu-lv

LV Name ubuntu-lv

VG Name ubuntu-vg

LV UUID QcRHWC-mFve-QL2e-Exi4-C6j3-PKnR-AhJjuY

LV Write Access read/write

LV Creation host, time ubuntu-server, 2023-01-23 12:05:30 +0000

LV Status available

# open 1

LV Size <8.25 GiB

Current LE 2111

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

--- Logical volume ---

LV Path /dev/share_vg/share_lv

LV Name share_lv

VG Name share_vg

LV UUID tWiGhf-0pQ3-TLcO-XCco-747i-Kj1y-gu2Mox

LV Write Access read/write

LV Creation host, time nas, 2023-01-29 01:10:01 +0000

LV Status available

# open 1

LV Size <9.99 GiB

Current LE 2557

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:1

#

# lvextend -l +100%FREE /dev/share_vg/share_lv

Size of logical volume share_vg/share_lv changed from <9.99 GiB (2557 extents) to <19.98 GiB (5114 extents).

Logical volume share_vg/share_lv successfully resized.
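
`lvextend` also accepts `-r` (`--resizefs`), which grows the file system in the same step, so a separate `resize2fs` run would not be needed. The sketch below only prints that combined command for review; the helper name `print_extend_cmd` is hypothetical.

```shell
#!/bin/sh
# Dry-run helper: print the combined extend-and-resize command for an LV path.
print_extend_cmd() {
  # %% in the printf format yields the literal % of +100%FREE.
  printf 'lvextend --resizefs -l +100%%FREE %s\n' "$1"
}

print_extend_cmd /dev/share_vg/share_lv
```

This walkthrough keeps the two steps separate, which makes it easier to see what each command changes.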

# LVM [ Filesystem Resize ]

# df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 198M 1.2M 197M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 8.1G 4.3G 3.4G 57% /

tmpfs 988M 0 988M 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/sda2 1.7G 127M 1.5G 8% /boot

/dev/mapper/share_vg-share_lv 9.8G 24K 9.3G 1% /mnt/share

tmpfs 198M 4.0K 198M 1% /run/user/1000

#

# resize2fs /dev/share_vg/share_lv

resize2fs 1.46.5 (30-Dec-2021)

Filesystem at /dev/share_vg/share_lv is mounted on /mnt/share; on-line resizing required

old_desc_blocks = 2, new_desc_blocks = 3

The filesystem on /dev/share_vg/share_lv is now 5236736 (4k) blocks long.

#

# df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 198M 1.2M 197M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 8.1G 4.3G 3.4G 57% /

tmpfs 988M 0 988M 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/sda2 1.7G 127M 1.5G 8% /boot

/dev/mapper/share_vg-share_lv 20G 24K 19G 1% /mnt/share

tmpfs 198M 4.0K 198M 1% /run/user/1000

#
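
The block count reported by `resize2fs` can be checked against the logical volume size to confirm that the file system now fills the whole volume. A minimal sketch using the numbers from the output above; `fs_fills_lv` is a hypothetical helper name.

```shell
#!/bin/sh
# Return 0 if the file system size equals the logical volume size.
# $1 = fs block count, $2 = fs block size in bytes,
# $3 = LV extent count, $4 = extent size in MiB
fs_fills_lv() {
  fs_bytes=$(( $1 * $2 ))
  lv_bytes=$(( $3 * $4 * 1024 * 1024 ))
  [ "$fs_bytes" -eq "$lv_bytes" ]
}

# 5236736 blocks of 4 KiB versus 5114 extents of 4 MiB
if fs_fills_lv 5236736 4096 5114 4; then
  echo "file system fills the logical volume"
fi
```

`df -h` shows 20G rather than the full 19.98 GiB because it rounds and because ext4 reserves some space for its own metadata.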

reboot and check

# cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md1 : active raid1 sde1[1] sdd1[0]

10474496 blocks super 1.2 [2/2] [UU]

md0 : active raid1 sdb1[0] sdc1[1]

10474496 blocks super 1.2 [2/2] [UU]

unused devices: <none>

# ls -l /dev/md*

brw-rw---- 1 root disk 9, 0 Jan 29 12:41 /dev/md0

brw-rw---- 1 root disk 9, 1 Jan 29 12:41 /dev/md1

#

# df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 198M 1.2M 197M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 8.1G 4.3G 3.4G 57% /

tmpfs 988M 0 988M 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/sda2 1.7G 127M 1.5G 8% /boot

/dev/mapper/share_vg-share_lv 20G 24K 19G 1% /mnt/share

tmpfs 198M 4.0K 198M 1% /run/user/1000

#
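
The post-reboot check can also be automated by scanning mdstat for arrays whose member status contains `_`, which marks a missing device (for example `[U_]`). This is a sketch under the assumption that the layout matches the output above; `degraded_arrays` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the name of every md array whose status field shows a missing member.
# $1 = mdstat file (defaults to /proc/mdstat)
degraded_arrays() {
  awk '
    /^md[0-9]+ :/ { name = $1 }            # array header line
    /\[[U_]*_[U_]*\]/ { print name }       # status such as [U_] or [_U]
  ' "${1:-/proc/mdstat}"
}

# Usage (not run here):
#   [ -z "$(degraded_arrays)" ] && echo "all arrays healthy"
```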